As food for thought, I would like to present a few more existing collections of requirements:

- Inclusive Design Principles

- Ethical Design Manifesto

- Small Tech Principles (excluding the texts on Personal and Non-commercial)

- Fair Web Services

- User Data Manifesto 2.0

- IndieWeb principles

I reproduce them below because I think it is important to listen to efforts that already exist in this direction. The Principles of Humane Technology may also be worth a look.

Ensure your interface provides a comparable experience for all so people can accomplish tasks in a way that suits their needs without undermining the quality of the content.

People use your interface in different situations. Make sure your interface delivers a valuable experience to people regardless of their circumstances.

Use familiar conventions and apply them consistently.

Ensure people are in control. People should be able to access and interact with content in their preferred way.

Consider providing different ways for people to complete tasks, especially those that are complex or non-standard.

Help users focus on core tasks, features, and information by prioritising them within the content and layout.

Consider the value of features and how they improve the experience for different users.

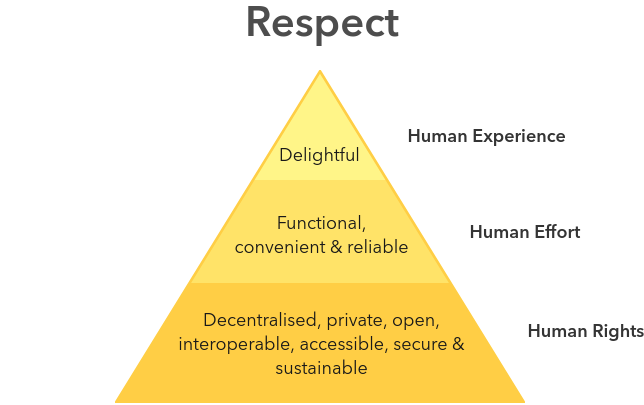

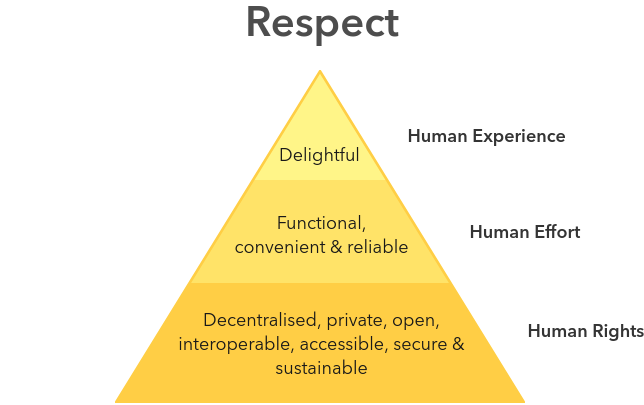

Human Experience

Technology that respects human experience is beautiful, magical, and delightful.

It just works. It’s intuitive. It’s invisible. It recedes into the background of your life. It gives you joy. It empowers you with superpowers. It puts a smile on your face and makes your life better.

Human Effort

Technology that respects human effort is functional, convenient, and reliable.

It is thoughtful and accommodating; not arrogant or demanding. It understands that you might be distracted or differently-abled. It respects the limited time you have on this planet.

Human Rights

Technology that respects human rights is decentralised, peer-to-peer, zero-knowledge, end-to-end encrypted, free and open source, interoperable, accessible, and sustainable.

It respects and protects your civil liberties, reduces inequality, and benefits democracy.

Personal technologies are everyday things that people use to improve the quality of their lives. As such, in addition to being functional, secure, and reliable, they must be convenient, easy to use, and inclusive. If possible, we should aim to make them delightful.

Small technology is made by humans for humans. They are not built by designers and developers for users. They are not built by Western companies for people in African countries. If our tools specifically target a certain demographic, we must ensure that our development teams reflect that demographic. If not, we must ensure people from a different demographic can take what we make and specialise it for their needs.

A tool respects your privacy only if it is private by default. Privacy is not an option. You do not opt into it. Privacy is the right to choose what you keep to yourself and what you share with others. “Private” (i.e., for you alone) is the default state of small technologies. From there, you can always choose who else you want to share things with.
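As a rough illustration of "private by default", the following minimal sketch models an item that only its owner can read until the owner explicitly shares it. The `Note` class and its method names are hypothetical and are not taken from the Small Tech Principles.

```python
from dataclasses import dataclass, field


@dataclass
class Note:
    """A hypothetical user-owned item that is private unless explicitly shared."""

    owner: str
    text: str
    # Empty by default: nobody but the owner can read the note.
    shared_with: set[str] = field(default_factory=set)

    def share_with(self, person: str) -> None:
        """Sharing is an explicit act, never the default state."""
        self.shared_with.add(person)

    def can_read(self, person: str) -> bool:
        """Only the owner and explicitly named people may read the note."""
        return person == self.owner or person in self.shared_with


note = Note(owner="alice", text="draft")
print(note.can_read("bob"))  # False: nothing has been shared yet
note.share_with("bob")
print(note.can_read("bob"))  # True: the owner chose to share
```

The design choice here is that there is no "public" flag to forget to switch off: access exists only where the owner has added it.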

Zero-knowledge tools have no knowledge of your data. They may store your data, but the people who make or host the tools cannot access your data even if they wanted to.

Examples of zero-knowledge designs are end-to-end encrypted systems where only you hold the secret key, and peer-to-peer systems where the data never touches the devices of the app maker or service provider (including combinations of end-to-end encrypted and peer-to-peer systems).

Peer-to-peer systems enable people to connect directly with one another without a person (or more likely a corporation or a government) in the middle. They are the opposite of client/server systems, which are centralised (the servers are the centres).

On peer-to-peer systems, your data, and the algorithms used to analyze and make use of your data, stay on devices that you own and control. You do not have to beg some corporation to not abuse your data because they don’t have it to begin with.

Most people’s eyes cloud over when technology licenses are mentioned but they’re crucial to protecting your freedom.

Small Technology is licensed under Copyleft licenses. Copyleft licenses stipulate that if you benefit from technology that has been put into the commons, you must share back (“share alike”) any improvements, changes, or additions you make. If you think about it, it’s only fair: if you take from the commons, you should give back to the commons. That’s how we cultivate a healthy commons.

Interoperable systems can talk to one another using well-established protocols. They’re the opposite of silos. Interoperability ensures that different groups can take a technology and evolve it in ways that fit their needs while still staying compatible with other tools that implement the same protocols. Interoperability, coupled with share alike licensing, helps us to distribute power more equally as rich corporations cannot “embrace and extend” commons technology, thereby creating new silos.

Interoperability also means we don’t have to resort to colonialism in design: we can design for ourselves and support other groups who design for themselves while allowing all of us to communicate with each other within the same global network.

Being inclusive in technology is ensuring people have equal rights and access to the tools we build and the communities who build them, with a particular focus on including people from traditionally marginalised groups. Accessibility is the degree to which technology is usable by as many people as possible, especially disabled people.

Small Technology is inclusive and accessible.

With inclusive design, we must be careful not to assume we know what’s best for others, despite us having differing needs. Doing so often results in colonial design, creating patronising and incorrect solutions.

Substitutability

The key criterion is that users are able to substitute one service with another if they wish. This gives users control, as they can take their data and move to a service which better serves them or even run the service themselves.

Transparency

Transparency is important, so that users can judge what the service does and how that affects their computing. Transparency of terms of use is especially important, as is transparency about what is done with the data and where it is stored and processed.

Privacy

Services need to respect the privacy of users and give them ultimate control over what happens with their data. Personal data should only be stored when necessary, and what happens with it has to be documented. Users need to be able to leave a service and have all their data removed.

Security

When running Free Software yourself, you have control over the security of the software: you can inspect the code, analyze it, and fix issues when necessary. For services this is not possible. They need to establish trust that they are operating in a secure way and are protecting the data of their users. They should use encryption where possible. They need to document procedures for identifying and fixing security issues. They need to communicate security issues clearly to their users.

Fair model of operation

Paying for a service is OK. Running web services can be costly, and users paying for their share of the costs is a fair model. Creating artificial restrictions as pay barriers, especially to the user's own data, is not fair. A user should never be forced to pay for getting access to their own data. A fair web service should also not use third-party advertisements, because that introduces tracking of users by third parties, and users lose control over this data. Users also lose control over what is shown alongside their own data.

Control over user data access

Data explicitly and willingly uploaded by a user should be under the ultimate control of the user. Users should be able to decide whom to grant direct access to their data and with which permissions and licenses such access should be granted.

Knowledge of how the data is stored

When the data is uploaded to a specific service provider, users should be informed about where that specific service provider stores the data, for how long, in which jurisdiction the specific service provider operates, and which laws apply.

Freedom to choose a platform

Users should always be able to extract their data from the service at any time without experiencing any vendor lock-in. Open standards for formats and protocols are necessary to guarantee this.
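To make the role of open formats concrete, here is a minimal sketch of a data export that another service could read back. It assumes JSON as the open format; the function names and the `format_version` field are hypothetical and not part of the User Data Manifesto.

```python
import json


def export_user_data(records: list[dict]) -> str:
    """Serialise a user's records to JSON, an open and widely supported format."""
    return json.dumps({"format_version": 1, "records": records}, indent=2, sort_keys=True)


def import_user_data(payload: str) -> list[dict]:
    """Read an export back, for example in a different service."""
    return json.loads(payload)["records"]


records = [{"id": 1, "title": "Hello"}]
exported = export_user_data(records)
# The round trip loses nothing, so the user can move to another platform.
assert import_user_data(exported) == records
```

Because the export relies only on a documented, non-proprietary format, any platform with a JSON parser can consume it, which is what removes the lock-in.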

- Own your data

- Use & publish visible data for humans first, machines second

- Make what you need / scratch your own itch: make tools, templates, etc. for yourself first, not for all of your friends or "everyone".

- Use what you make / selfdogfood: whatever you build, you should actively use.

- Document your processes, ideas, designs and code

- Open source your stuff!

- UX before plumbing: UX and design are more important than protocols, formats, data models, schema etc.

- Modularity / build platform-agnostic platforms: design and build modular code and services composed of pieces that can be swapped to reduce dependencies on a particular device, language, API, storage model, or platform

- Longevity: keep your data as future-friendly and future-proof as possible

- Plurality: encourage and embrace a diversity of approaches & implementations

- Have fun

But it would be very desirable!